In this lab, we will investigate the impact of different algorithms which produce the same effect.

We will test and select one of three algorithms for adjusting the volume of PCM audio sample based on benchmarking.

vol1.c : the basic or Navie algorithm

This approach multiplies each sound sample by 0.75, casting from signed 16-bit integer to floating point and back again. Casting between integer and floating point can be expensive operations.

vol2.c: A lookup-based algorithm

This approach uses a pre-calculated table of all 65536 possible results, and looks up each sample in that table instead of multiplying.

vol3.c: A fixed-point algorithm

This approach uses fixed-point math and bit shifting to perform the multiplication without using floating-point math.

Make a prediction

we will process with 500000000 samples.

When I read the code vol3 and vol2 is similar and vol1 is most slow.

most of part of code is similar but, the "interesting part" slightly different.

In case of vol1.c, using the "data[x] = scale_sample(data[x], 0.75);" in the loop. However vol3.c using this calculation code "vf = 0.75 * 65536;" out of the loop and use the variable "vf" in the loop.

Here id the code we have for vol1.c

// Function to scale a sound sample using a volume_factor

// in the range of 0.00 to 1.00.

static inline int16_t scale_sample(int16_t sample, float volume_factor) {

return (int16_t) (volume_factor * (float) sample);

}

int main() {

// Allocate memory for large data array

int16_t* data;

data = (int16_t*) calloc(SAMPLES, sizeof(int16_t));

int x;

int ttl = 0;

// Seed the pseudo-random number generator

srand(1);

// Fill the array with random data

clock_t start, stop;

int i;

start =clock();

for (x = 0; x < SAMPLES; x++) {

data[x] = (rand()%65536)-32768;

}

printf("\n\n");

stop = clock();

// ######################################

// This is the interesting part!

// Scale the volume of all of the samples

for (x = 0; x < SAMPLES; x++) {

data[x] = scale_sample(data[x], 0.75);

}

// ######################################

// Sum up the data

for (x = 0; x < SAMPLES; x++) {

ttl = (ttl+data[x])%1000;

}

// Print the sum

printf("Result: %d\n", ttl);

return 0;

}

Here id the code we have for vol1.c

[hskwon@bbetty algorithm_selection_lab]$ cat vol2.c

#include <stdlib.h>

#include <stdio.h>

#include <stdint.h>

#include "vol.h"

// Function to initialize the scaling table

static inline void scale_init(int16_t* table, float volume_factor) {

for (int i=-32768; i<32767; i++) {

table[(uint16_t) i] = ((float) i) * volume_factor;

}

return;

}

int main() {

// Allocate memory for large data array

int16_t* data;

data = (int16_t*) calloc(SAMPLES, sizeof(int16_t));

int x;

int ttl = 0;

int16_t table[65536];

// Seed the pseudo-random number generator

srand(1);

// Fill the array with random data

for (x = 0; x < SAMPLES; x++) {

data[x] = (rand()%65536)-32768;

}

// ######################################

// This is the interesting part!

// Scale the volume of all of the samples

scale_init(table, 0.75);

for (x = 0; x < SAMPLES; x++) {

data[x] = table[(uint16_t) data[x]];

}

// ######################################

// Sum up the data

for (x = 0; x < SAMPLES; x++) {

ttl = (ttl+data[x])%1000;

}

// Print the sum

printf("Result: %d\n", ttl);

return 0;

}

Here id the code we have for vol1.c

[hskwon@bbetty algorithm_selection_lab]$ cat vol3.c

#include <stdlib.h>

#include <stdio.h>

#include <stdint.h>

#include "vol.h"

int main() {

// Allocate memory for large data arrray

int16_t* data;

data = (int16_t*) calloc(SAMPLES, sizeof(int16_t));

int x;

int ttl = 0;

int vf;

int16_t table[65536];

// Seed the pseudo-random number generator

srand(1);

// Fill the array with random data

for (x = 0; x < SAMPLES; x++) {

data[x] = (rand()%65536)-32768;

}

// ######################################

// This is the interesting part!

// Scale the volume of all of the samples

vf = 0.75 * 65536;

for (x = 0; x < SAMPLES; x++) {

data[x] = (data[x] * vf) >>16;

}

// ######################################

// Sum up the data

for (x = 0; x < SAMPLES; x++) {

ttl = (ttl+data[x])%1000;

}

// Print the sum

printf("Result: %d\n", ttl);

return 0;

}

Build and test each of the programs

Find a way to measure performance without the time taken to perform the test setup pre-processing (generating the samples) and post-processing (summing the results) so that you can measure only the time taken to scale the samples.

I used below with #include <sys/time.h> .

Change the number of samples so that each program takes a reasonable amount of time to execute

* sample: 500,000,000

|

Arcitecture

|

|

1st

|

2nd

|

3rd

|

|

Aarch

64 - bbetty

|

Vol1

|

934.655029

|

935.343994

|

934.682007

|

|

Vol2

|

481.979004

|

479.429993

|

480.885986

|

|

|

Vol3

|

782.955017

|

782.963013

|

782.968018

|

|

|

X84-64

: xerxes

|

Vol1

|

342.062012

|

341.799988

|

342.772003

|

|

Vol2

|

273.645020

|

274.656006

|

273.539001

|

|

|

Vol3

|

212.174011

|

212.164993

|

212.121002

|

|

Arcitecture

|

|

1st

|

2nd

|

3rd

|

|

Aarch

64 - bbetty

|

Vol1

|

1871.629028

|

1871.630981

|

1870.562012

|

|

Vol2

|

85.615005

|

76.682007

|

73.014008

|

|

|

Vol3

|

1568.022949

|

1568.958008

|

1568.334961

|

|

|

X84-64

: xerxes

|

Vol1

|

682.671997

|

682.812988

|

683.776978

|

|

Vol2

|

546.969971

|

581.843018

|

548.658020

|

|

|

Vol3

|

425.340027

|

424.556030

|

425.140991

|

* sample: 2,000,000,000

|

Arcitecture

|

|

1st

|

2nd

|

3rd

|

|

Aarch

64 - bbetty

|

Vol1

|

3743.591064

|

3743.652100

|

3744.966064

|

|

Vol2

|

1099.267090

|

1089.684082

|

1064.688965

|

|

|

Vol3

|

3138.756104

|

3138.928955

|

3138.953125

|

|

|

X84-64

: xerxes

|

Vol1

|

1401.909912

|

1523.831055

|

1409.390015

|

|

Vol2

|

1119.677002

|

1138.452026

|

1105.764038

|

|

|

Vol3

|

874.038025

|

848.578003

|

873.478027

|

vol1 is most slow and vol2 is most fast in Aarch64 server. In xerxes server, vol3 is most fast and vol1 is most slow. When the number of samples was doubled (500,000,000 -> 1,000,000,000), the speed was also doubled. However, When the number of samples was increased 4 times(50,000,000 - > 20,000,000), the speed was also 4 times slower exept vol2.

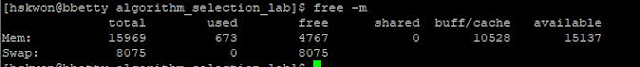

Memory usage of each program

Aarch64 : bbetty

X86-64 : xerxes

What I learn..

Even if we have same file, the running speed is different depending on the server.

No comments:

Post a Comment